When it comes to optimizing website performance, A/B testing is a powerful technique for making data-driven decisions. It compares two or more versions of a webpage to determine which one performs better on user engagement, conversions, and other key metrics. In this beginner’s guide to A/B testing, we will explore the fundamental concepts, methodologies, and best practices that will help you get started with this invaluable optimization tool.

Understanding A/B Testing

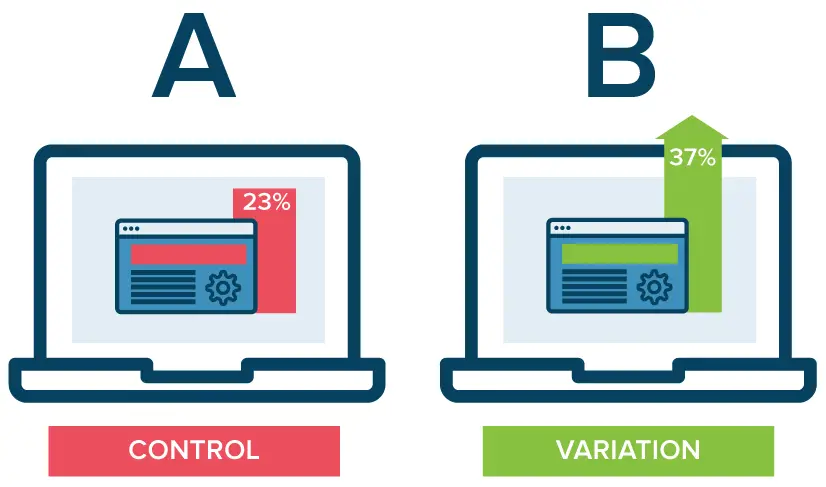

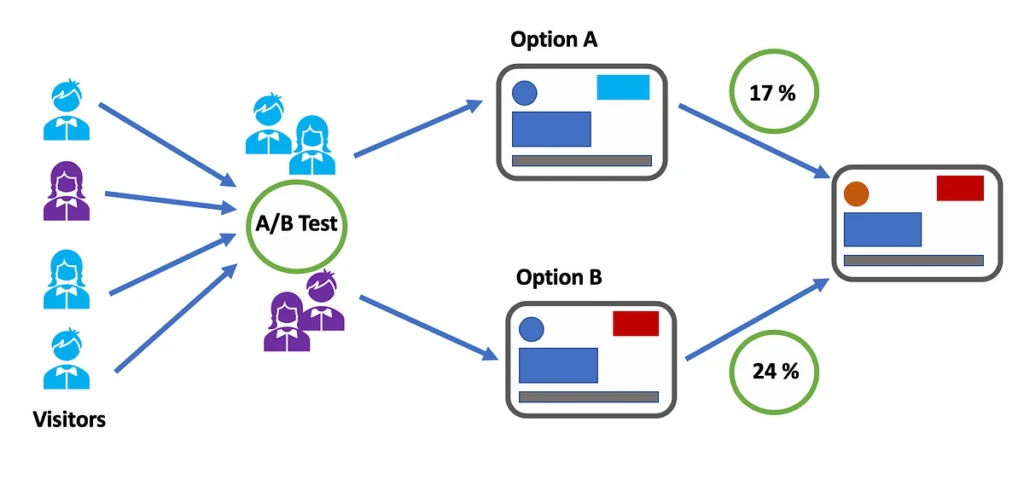

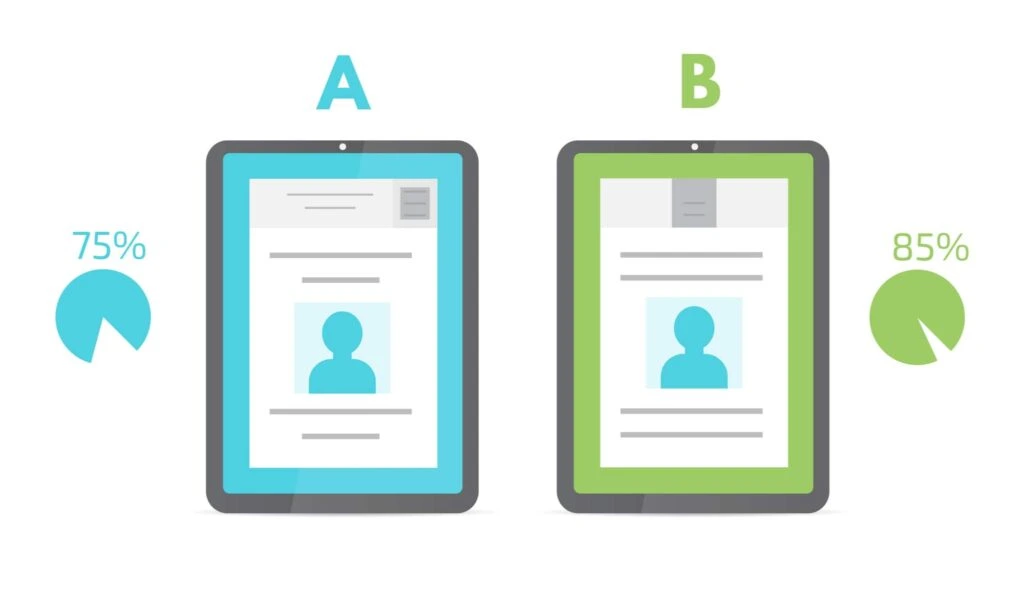

A/B testing, also known as split testing, involves randomly splitting your website traffic into different groups and showing each group a different version of a webpage. One group sees the original version (the control), while the other sees a modified version (the variant). By measuring the performance of each version, you can gather valuable insights into user behavior and preferences.
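A common way to split traffic is to assign each visitor deterministically from a stable identifier, so the same person always lands in the same group. The sketch below hashes a user ID together with an experiment name; the experiment name `cta-test`, the 50/50 share, and the use of SHA-256 are illustrative assumptions, and dedicated A/B testing tools usually handle this step for you.

```python
import hashlib


def assign_group(user_id: str, experiment: str = "cta-test",
                 variant_share: float = 0.5) -> str:
    """Deterministically assign a user to 'control' or 'variant'.

    Hashing the (hypothetical) experiment name together with the user ID
    gives each user a stable pseudo-random position in [0, 1). Users whose
    position falls below variant_share see the variant; everyone else sees
    the control. The same inputs always produce the same group.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Take the first 32 bits of the hash and scale them into [0, 1).
    position = int(digest[:8], 16) / 0x100000000
    return "variant" if position < variant_share else "control"


# Usage: the group for a given user never changes between visits.
for uid in ["user-1", "user-2", "user-3"]:
    print(uid, assign_group(uid))
```

Including the experiment name in the hash means a user who is in the variant of one test is not automatically in the variant of every other test.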

Before embarking on an A/B testing campaign, it is crucial to define clear goals and metrics. What are you trying to achieve with your test? Is it increasing click-through rates, improving conversion rates, or reducing bounce rates? By setting specific and measurable goals, you can focus your efforts on the areas that matter most to your business.
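To make these goals measurable, it helps to pin down exactly how each metric is computed before the test starts. The sketch below assumes you already have aggregate counts for one page version; the `PageStats` fields and the definitions used (for example, counting a bounce as a single-page session) are illustrative assumptions, and analytics tools may define these metrics slightly differently.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Aggregate counts for one version of a page (hypothetical schema)."""
    sessions: int              # visits that landed on the page
    single_page_sessions: int  # visits that left without viewing another page
    impressions: int           # times the call-to-action was shown
    clicks: int                # clicks on the call-to-action
    conversions: int           # completed goals, e.g. sign-ups or purchases


def rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when there is no data yet."""
    return numerator / denominator if denominator else 0.0


def summarize(stats: PageStats) -> dict[str, float]:
    """Compute the three example metrics from the raw counts."""
    return {
        "click_through_rate": rate(stats.clicks, stats.impressions),
        "conversion_rate": rate(stats.conversions, stats.sessions),
        "bounce_rate": rate(stats.single_page_sessions, stats.sessions),
    }


stats = PageStats(sessions=2000, single_page_sessions=900,
                  impressions=1800, clicks=270, conversions=80)
print(summarize(stats))
# {'click_through_rate': 0.15, 'conversion_rate': 0.04, 'bounce_rate': 0.45}
```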

Formulating A/B Testing Hypotheses

To conduct an effective A/B test, it is essential to develop hypotheses based on user insights and data analysis. A hypothesis states the expected outcome of a test and provides a direction for experimentation. For example, you may hypothesize that changing the color of a call-to-action button will increase conversions. By formulating clear hypotheses, you can design more targeted and meaningful experiments.
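In statistical terms, the button-color example becomes a pair of hypotheses about conversion rates. In this sketch, p_A and p_B (our notation, not part of the original example) stand for the true conversion rates of the control and the variant:

```latex
\begin{aligned}
H_0 &: p_B = p_A    && \text{(the new button color makes no difference)} \\
H_1 &: p_B \neq p_A && \text{(the new button color changes the conversion rate)}
\end{aligned}
```

The test then asks whether the collected data provide enough evidence to reject H_0. A one-sided alternative, p_B > p_A, matches the "will increase conversions" wording more literally, but it cannot flag a variant that actually hurts conversions.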

Designing Variations for A/B Testing

Once you have defined your goals and hypotheses, it’s time to create the variations for your A/B test. This involves making changes to elements such as headlines, images, layouts, colors, or even the entire design. If you want to learn which element drives a result, change only one element per variant so you can isolate its impact and accurately measure its effect on user behavior. A complete redesign can still tell you which page performs better, but not why.
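One lightweight safeguard is to describe each version as a set of element settings and check that the variant differs from the control in exactly one place. The element names and values below are hypothetical; the point is the check, not the particular page.

```python
def changed_elements(control: dict[str, str], variant: dict[str, str]) -> set[str]:
    """Return the names of page elements whose value differs between versions."""
    keys = control.keys() | variant.keys()
    return {k for k in keys if control.get(k) != variant.get(k)}


def check_single_change(control: dict[str, str], variant: dict[str, str]) -> str:
    """Return the one changed element, or raise if zero or several changed."""
    changed = changed_elements(control, variant)
    if len(changed) != 1:
        raise ValueError(f"Expected exactly one changed element, found: {sorted(changed)}")
    return changed.pop()


# Hypothetical page settings: the variant only changes the button color.
control = {"headline": "Build your website today",
           "hero_image": "team.jpg",
           "cta_color": "blue"}
variant = {**control, "cta_color": "orange"}

print(check_single_change(control, variant))  # prints "cta_color"
```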

Splitting Traffic and Sample Size

To ensure the validity of your test results, visitors must be assigned to the control and variant groups at random, typically with an even split. This can be done with your own randomization logic or with tools designed specifically for A/B testing. Additionally, you need to determine an appropriate sample size before the test starts. A sample that is too small lacks the statistical power to detect a real difference, which leads to false negatives; the false-positive rate, by contrast, is controlled by the significance level you choose (commonly 5%), not by the sample size.
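For conversion rates, a standard way to estimate the required sample size uses the normal approximation for comparing two proportions. The sketch below assumes a two-sided test; the baseline rate (5%), the target rate (6%), the significance level (alpha = 0.05) and the power (80%) are illustrative values you would replace with your own.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH group to detect p_control -> p_variant.

    Normal approximation for a two-sided, two-proportion z-test.
    alpha: accepted false-positive rate.
    power: probability of detecting the difference if it really exists
           (1 minus the false-negative rate).
    """
    if not (0 < p_control < 1 and 0 < p_variant < 1) or p_control == p_variant:
        raise ValueError("rates must lie in (0, 1) and differ")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    p_bar = (p_control + p_variant) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_control * (1 - p_control)
                                      + p_variant * (1 - p_variant))) ** 2
    return math.ceil(numerator / (p_variant - p_control) ** 2)


# Baseline conversion rate of 5%; we want to detect a lift to 6%.
print(sample_size_per_group(0.05, 0.06))  # a little over 8,000 visitors per group
```

Note how quickly the requirement grows as the effect shrinks: halving the difference you want to detect roughly quadruples the sample size.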

Implementing the A/B Test

Once you have prepared your variations and set up the traffic splitting, it’s time to implement the test. This involves integrating the necessary code snippets or using an A/B testing platform that simplifies the process. Ensure that the test is correctly deployed across all relevant pages and devices to capture a comprehensive set of data.
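Part of a correct deployment is making sure each visitor keeps seeing the same version on every page they visit. The sketch below stores the assignment in a cookie using Python's standard `http.cookies` module; the cookie name `ab_cta_test` and the 30-day lifetime are hypothetical choices, and a cookie only persists within one browser, so consistency across devices would require keying the assignment on a logged-in account ID instead.

```python
import random
from http.cookies import SimpleCookie
from typing import Optional

COOKIE_NAME = "ab_cta_test"  # hypothetical cookie name for this experiment
GROUPS = ("control", "variant")


def get_or_assign_group(cookie_header: str,
                        rng: Optional[random.Random] = None) -> tuple[str, Optional[str]]:
    """Return (group, Set-Cookie value or None) for one incoming request.

    Returning visitors keep the group stored in their cookie, so every page
    shows them the same version. New visitors (or ones with an unrecognized
    value) are assigned 50/50 at random and get a cookie to persist the choice.
    """
    incoming = SimpleCookie()
    incoming.load(cookie_header or "")
    morsel = incoming.get(COOKIE_NAME)
    if morsel is not None and morsel.value in GROUPS:
        return morsel.value, None  # already assigned: no new cookie needed

    group = (rng or random.Random()).choice(GROUPS)
    outgoing = SimpleCookie()
    outgoing[COOKIE_NAME] = group
    outgoing[COOKIE_NAME]["path"] = "/"                   # send it on every page
    outgoing[COOKIE_NAME]["max-age"] = 60 * 60 * 24 * 30  # remember for 30 days
    return group, outgoing[COOKIE_NAME].OutputString()


# Usage: the first page view assigns a group; later page views reuse it.
group, set_cookie = get_or_assign_group("")
same_group, _ = get_or_assign_group(f"{COOKIE_NAME}={group}")
print(group, same_group, set_cookie)
```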

Analyzing the Results

After running your A/B test for a sufficient duration, ideally one you fixed in advance, it’s time to analyze the results. Stopping the test as soon as the numbers look favorable inflates the chance of a false positive. Look at key metrics such as conversion rates, engagement metrics, and other relevant data points. Then use a statistical test to check whether the observed differences between the control and variant groups are statistically significant or could plausibly be due to chance. This will help you make a data-driven decision about the winning variation.
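For conversion rates, one common choice of statistical test is the two-sided two-proportion z-test, sketched below with Python's standard library. The counts in the example are made up for illustration, and the normal approximation it relies on is only reasonable when each group has a decent number of conversions.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in conversion rates.

    conv_a, n_a: conversions and visitors in the control group
    conv_b, n_b: conversions and visitors in the variant group
    Returns (z statistic, p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; cannot run the test")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 400 vs. 480 conversions out of 8,200 visitors each.
z, p = two_proportion_z_test(conv_a=400, n_a=8200, conv_b=480, n_b=8200)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 5%" if p < 0.05 else "not significant at 5%")
```

A small p-value says the difference is unlikely to be pure chance; it does not say how large or how valuable the improvement is, so look at the size of the lift as well.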

Iterating and Continuous Optimization

A/B testing is not a one-time activity but a continuous process of optimization. Even after obtaining successful results, there is always room for further improvement. Use the insights gained from one test to inform your next round of experiments. Iterate, refine, and continue testing to maximize the performance of your website.

Avoiding Common Pitfalls

When it comes to A/B testing, it’s important to be aware of common pitfalls that can undermine the accuracy and reliability of your results. Avoiding them helps ensure that your tests provide meaningful insights and support sound decisions. Let’s look at them in more detail:

- Testing Too Many Variations at Once: One common mistake is testing too many variations simultaneously. Every extra variant splits your traffic further, so each group takes longer to reach a meaningful sample size, and every extra comparison increases the chance that one variant looks like a winner by luck alone. Bundling several changes into a single variant causes a related problem: you cannot tell which change caused any observed difference. To address this, focus on testing one key element at a time, ensuring that each variation has a clear objective and hypothesis.

- Insufficient Testing Duration: A/B tests need to collect enough data to reach statistical significance. Running tests for too short a duration can produce inconclusive or misleading results, especially if the test does not cover a full weekly cycle of visitor behavior. On the other hand, running tests for too long wastes resources and delays the rollout of successful changes. Determine an appropriate testing duration based on your website traffic, your baseline conversion rate, the smallest improvement you want to detect, and the statistical confidence you require.

- Misinterpreting Results: It’s essential to interpret A/B test results accurately. Avoid making assumptions or drawing conclusions solely based on surface-level observations. Take into account the context, user behavior patterns, and any potential confounding factors that may have influenced the results. Be cautious of attributing success or failure to a specific change without a comprehensive understanding of the underlying factors at play.

- Implementation Delay or Neglect: A winning variation only improves your site once it is rolled out. A common pitfall is delaying or neglecting that rollout. To maximize the impact of your testing efforts, implement the winning variation promptly.
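The duration and too-many-variations pitfalls are linked by simple arithmetic: the visitors each version needs, multiplied by the number of versions, divided by your daily traffic. The sketch below assumes you already know the required visitors per group from a sample-size calculation and that traffic is split evenly; the traffic figures are hypothetical, and rounding up to whole weeks is one common convention rather than a rule. It also ignores corrections for multiple comparisons, which would push the requirement for A/B/n tests even higher.

```python
import math


def estimated_test_days(required_per_group: int, daily_visitors: int,
                        num_versions: int = 2, traffic_share: float = 1.0,
                        round_to_full_weeks: bool = True) -> int:
    """Estimate how many days a test must run to fill every group.

    required_per_group: visitors each version needs (from a sample-size calculation)
    daily_visitors:     average visitors per day to the tested page
    num_versions:       control plus all variants; traffic is split evenly
    traffic_share:      fraction of visitors included in the experiment
    """
    if daily_visitors <= 0 or not 0 < traffic_share <= 1:
        raise ValueError("need positive traffic and a share in (0, 1]")
    visitors_needed = required_per_group * num_versions
    days = math.ceil(visitors_needed / (daily_visitors * traffic_share))
    if round_to_full_weeks:
        # Cover whole weeks so weekday and weekend behavior are both represented.
        days = math.ceil(days / 7) * 7
    return days


# Hypothetical page: 1,500 visitors/day, 8,200 visitors needed per version.
print(estimated_test_days(8200, 1500))                  # 14 (A/B, 2 versions)
print(estimated_test_days(8200, 1500, num_versions=4))  # 28 (A/B/n, 4 versions)
```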

To navigate these pitfalls, approach A/B testing strategically. Develop clear hypotheses, establish measurable goals, and plan your experiments carefully. Follow best practices such as randomizing traffic, ensuring an adequate sample size, and conducting thorough statistical analysis. Regularly review and refine your testing processes, learning from past experiences to continuously optimize your A/B testing strategies.

By avoiding these pitfalls, you can run effective A/B tests, make data-driven decisions, and optimize your website for better performance and user experience. Keep them in mind throughout your testing journey to protect the validity and reliability of your results.

Conclusion

A/B testing is a powerful technique that empowers you to optimize your website based on data and user behavior. By following the principles and best practices outlined in this beginner’s guide, you can embark on successful A/B testing campaigns and drive continuous improvements in your website’s performance. Remember, it’s not about guesswork or intuition but rather about making informed decisions backed by solid data. Start your A/B testing journey today and unlock the potential for growth and success.

Yes Web Design Studio

Tel. : 096-879-5445

LINE : @yeswebdesign

E-mail : info@yeswebdesignstudio.com

Facebook : Yes Web Design Studio | Web Design Company Bangkok

Instagram : yeswebdesign_bkk

Address : 17th Floor, Wittayakit Building, Phayathai Rd, Wang Mai, Pathum Wan, Bangkok 10330 (BTS SIAM STATION)